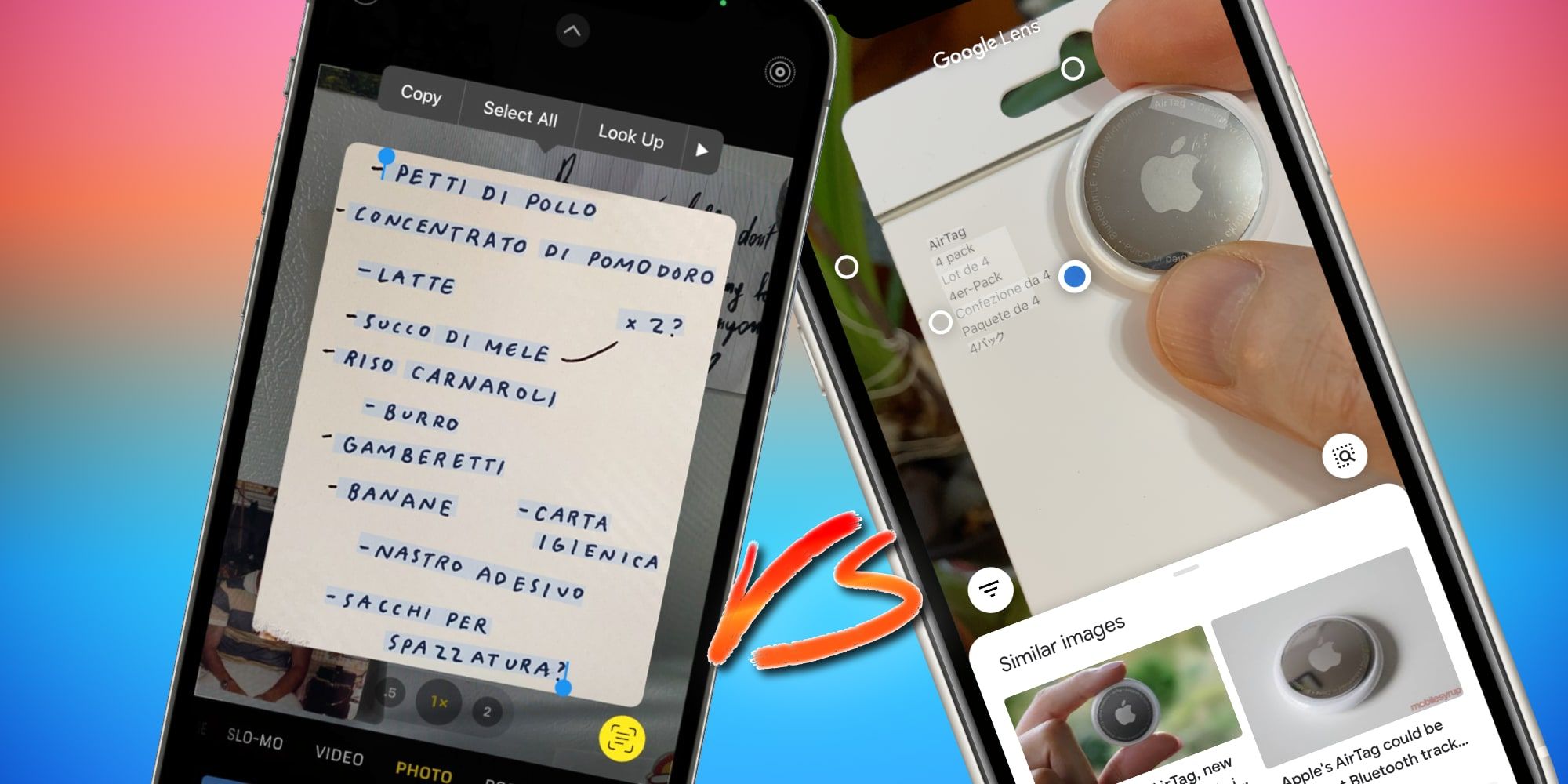

Apple recently announced its new Live Text feature for iPhone, which is very similar to Google Lens. While Lens has been in active development for several years now, Apple's solution is currently in developer beta testing and won't officially launch until the iOS 15 update arrives later this year. Mac desktops and laptops that can run macOS Monterey, and any iPad that is compatible with iPadOS 15, will be getting these same text and object recognition features.

Google Lens first appeared with the company's Pixel 2 smartphone in 2017. Text recognition software existed long before that, but with Google's implementation, this capability was combined with Google Assistant and the company's search engine. Actions based on the text found in photos and live camera views are more useful than simply extracting the text from an image, as earlier OCR (optical character recognition) software did. Object recognition adds another layer of complexity, identifying people and animals for use in Google's online photo storage service, Google Photos. Apple enhanced its Photos app with similar object recognition for the purpose of organizing photos, but there was no real challenge to Google Lens until Live Text was announced.


With the upcoming iOS 15 update, Apple's Live Text quickly identifies any text in a photo, making every word, symbol, number, and letter selectable, searchable, and available for various actions. Any part or all of the words in a photo can be translated, and email addresses and phone numbers become links that launch the Mail and Phone apps when tapped or clicked. Spotlight search will also be able to find images based on the text and objects in them, making it much easier for those with large libraries to retrieve information or locate a particular photo. These are useful and very exciting additions to Apple devices but, in reality, iPhone and iPad users have had access to similar capabilities since 2017, when Google added Lens to its iOS search app. Google Photos is also available on Apple devices, meaning searching for text and objects works there. The feature comparison is very close, and Apple still has several months before the official launch. For owners of compatible Apple devices, the Live Text features will provide capabilities that have become common on many Android phones.

Apple's new Live Text and Visual Look Up features require a relatively new iPhone or iPad with an A12 processor or newer. For Mac computers, only the latest models that use Apple's M1 chip are compatible. Intel-based computers, even those less than a year old, will not have access to Live Text. Google Lens and Google Photos work their magic on a much wider variety of mobile devices and computers. Lens was initially only available on Pixel phones, then expanded to a few other Android phones and Chrome OS devices. Most Android devices got access through Photos. Through a web browser, Windows, Mac, and Linux computers can use Google's robust set of image recognition features, with Lens showing as an option when text is recognized in a photo. This means Google Lens is available for almost every recent device from any manufacturer. Google also has far more experience with image recognition, having launched Lens several years before Apple's Live Text, so Google Lens will likely be more accurate and have more features overall. Since Live Text hasn't been released to the public yet, more features may be added and compatibility might grow over time, just as it did with Google Lens.

The reason Apple only made Live Text available on its recent in-house processors is its dedication to privacy. While Google Lens requires an active internet connection, Apple's Live Text and Visual Look Up do not, with the processing using the AI capabilities of the chips built into an iPhone, iPad, or M1 Mac. On a slow internet connection, this might give better performance than Google. Apple could have written the code to run Live Text on Intel processors, since a Mac Pro offers much higher performance than an M1 Mac. However, Apple is transitioning away from Intel and is less likely to write complex algorithms that rely on specialized hardware for those older systems. This is bad news for those that bought an Intel Mac in 2015, but isn't really a surprise. For Apple devices that aren't compatible, Google has a good solution for text recognition, translation, and more, through Google Photos and the Google search mobile app. For owners of an M1 Mac or a recent iPhone model, Apple's Live Text provides a privacy-focused answer that integrates better with Apple's Photos app and the entire Apple ecosystem.


Read more: screenrant.com